Balancing the risk and reward of ChatGPT – as a large language model (LLM) and an example of generative AI – begins by performing a risk assessment of the potential of such a powerful tool to cause harm. Consider the following.

ChatGPT training

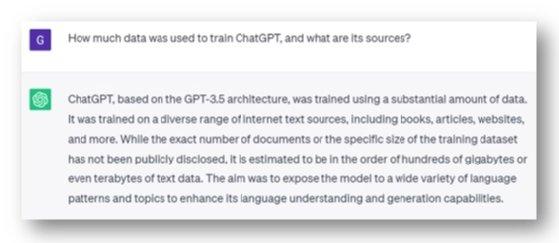

Italy and Germany made ChatGPT headlines: “OpenAI allegedly processed personal data without informing users and individuals and may lack a legal basis for the extensive data collection used to train its AI models.” According to ChatGPT, it was trained on a diverse range of internet sources, and OpenAI may argue that this training data is in the public domain. This argument may not hold up.

For example, Clearview AI once offered facial recognition services to Canadian law enforcement agencies and private sector entities. However, the Privacy Commissioner of Canada and several provincial privacy offices found that it had breached federal and provincial privacy laws by collecting publicly accessible online images of individuals, including children, without their knowledge or consent, and by using the data in ways unrelated to its original purpose. In other words, just because some data are public does not make them a free-for-all. Because no authorisation had been granted to use sensitive personal data for a new purpose, this was seen as a breach.

There is also a security risk from the intentional corruption of training data, which would affect ChatGPT’s responses.

Response: Stiffer regulations with consequences for non-adherence? Increased AI transparency? Oversight of training data processes? Greater collaboration balanced between technology owners and public interest groups? As detailed in an Al Jazeera article concerning generative AI’s societal risks: “Sam Altman, the chief executive of ChatGPT’s OpenAI, testified before members of a Senate subcommittee… about the need to regulate the increasingly powerful artificial intelligence technology being created inside his company and others like Google and Microsoft.”

ChatGPT prompts

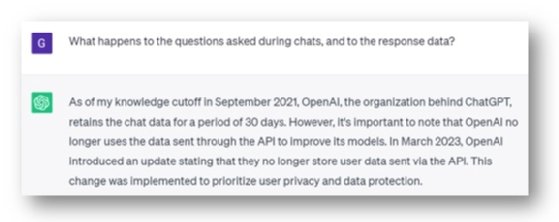

OpenAI (ChatGPT’s creator) once stored chat data for 30 days, some of which was used to improve its models. According to ChatGPT, OpenAI stopped doing this from March 2023, “to prioritise user privacy and data protection”.

Furthermore, chat data captured before March 2023 was subject to a 30-day retention policy. Note: OpenAI’s data usage and retention policies should be studied as part of a proper cyber risk assessment rather than depending on an answer from ChatGPT.

Another cyber security risk arises when a user submits sensitive corporate data to ChatGPT for analysis, which is similar to giving that data to an unknown entity. This is a breach, and third-party risk exacerbates its extent. The risks include passing data to a third-party system without appropriate due diligence and not assigning appropriately monitored system-level authorities for it.

And what about third-party tools that use OpenAI as a platform? This abstraction raises risks such as contradictory policies between the platform and the tool and differences in chat and response storage approaches. This means performing due diligence not only on OpenAI but on the abstraction tool, too. There is also the security risk of a prompt being modified or otherwise redirected once submitted at any level, resulting in returning or performing unintended actions.

Response: Update and communicate corporate data security policies. Check access controls to ensure their suitability. Enhance/apply third-party due diligence processes. Also, just as public cloud is differentiated from private cloud, public chatbots may evolve into private chatbots to enable the more secure release of ChatGPT’s capabilities in corporate settings.
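One way a data security policy might be enforced in practice is to redact obviously sensitive values before a prompt leaves the organisation. The sketch below is illustrative only: the function name `redact` and the two patterns (email addresses and card-like digit runs) are assumptions made for this example, not part of any OpenAI tooling, and a real policy would define its own categories of sensitive data.

```python
import re

# Hypothetical patterns for illustration; a real policy would define its own.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(prompt: str) -> str:
    """Replace each match of a sensitive pattern with a labelled placeholder."""
    for label, pattern in REDACTION_PATTERNS.items():
        prompt = pattern.sub(f"[{label} REDACTED]", prompt)
    return prompt


if __name__ == "__main__":
    print(redact("Card 4111 1111 1111 1111 for jane.doe@example.com"))
```

Pattern matching of this kind only catches data in predictable formats. It would not recognise, for instance, a confidential strategy document pasted as free text, so it complements rather than replaces user awareness and access controls.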

ChatGPT responses

ChatGPT can be used by the unsavoury sort to conduct email or telephone conversations of such quality that they win the trust of a vulnerable human target, who then hands over the keys to their bank account or exposes their national identification details. ChatGPT makes social engineering, one of the greatest cyber risks, easier by making it harder to identify whether a conversation is legitimate.

Today, cyber security awareness courses teach one to check for spelling, grammar, and conversational anomalies as a means of identifying phishing emails. For telephone calls, such training may cover asking for the caller’s credentials and verifying them. However, if the typical red flags don’t come up, how many average people may be led to believe, incorrectly, that those conversations are trustworthy?

There is also the risk of an over-dependence on ChatGPT responses, especially when applied in a security setting, amplifying the training data and prompt risks raised earlier. Putting the responses into action without verification may therefore expose an organisation to new security risks.

Response: For emails, one red flag remains key: the originating email domain. Check telephone numbers and validate caller identity. Also, corporate cyber security awareness training should cover conversational AI tools. Validate ChatGPT responses in critical scenarios before acting on them. Critical thinking is more important than ever.
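The email domain check can be partly automated. The following is a minimal sketch that assumes a hypothetical allowlist, `TRUSTED_DOMAINS`, and the helper names `sender_domain` and `is_trusted_sender`, all invented for this illustration. It extracts the domain from a message’s From header using Python’s standard `email.utils.parseaddr` and compares it with the allowlist.

```python
from email.utils import parseaddr

# Hypothetical allowlist; a real deployment would load this from policy.
TRUSTED_DOMAINS = {"example.com", "bank.example"}


def sender_domain(from_header: str) -> str:
    """Return the lower-cased domain of the address in a From header, or ''."""
    _display_name, address = parseaddr(from_header)
    if "@" not in address:
        return ""
    return address.rsplit("@", 1)[1].lower()


def is_trusted_sender(from_header: str) -> bool:
    """True only if the sender's domain exactly matches an allowlisted domain."""
    return sender_domain(from_header) in TRUSTED_DOMAINS


if __name__ == "__main__":
    # A lookalike domain ("examp1e.com") fails the exact-match check.
    print(is_trusted_sender('"Example Bank" <alerts@examp1e.com>'))
    print(is_trusted_sender("Support <help@Example.com>"))
```

Exact matching is a deliberate choice here, since lookalike domains differ from the genuine one by a single character. Because a From header can itself be forged, a check like this is one signal among several and not proof of legitimacy.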

Conclusion

This article explored three groups of cyber security issues emerging from the use of ChatGPT. The nascent nature of ChatGPT means that it is expected to change quickly and to keep on changing. Not keeping up with these changes will introduce more risks and leave some controls outliving their usefulness. As always, controls remain effective only if they are revised frequently, especially in rapidly evolving environments.

Former Google CEO Eric Schmidt warns that today’s AI systems will soon be able to perform zero-day exploits, and that the US is unprepared for a world of AI. Ultimately, there is a whole new world of cybersecurity issues to come – corporate and sovereign – as we use a new generation of AI tools. While there is much we don’t know, it is best to be proactive in the face of these new threats while generative AI is still in its infancy.

Guy Pearce is a member of the ISACA Emerging Trends Working Group.
