To assist humans during their day-to-day activities and successfully complete domestic chores, robots should be able to effectively manipulate the objects we use every day, including utensils and cleaning equipment. Some objects, however, are difficult to grasp and handle for robotic hands, due to their shape, flexibility, or other characteristics.

These objects include textile-based cloths, which are commonly used by humans to clean surfaces, polish windows, glass or mirrors, and even mop the floors. Robots could potentially take over all of these tasks, but first they will need to be able to grasp and manipulate cloths.

Researchers at ETH Zurich recently introduced a new computational technique to create visual representations of crumpled cloths, which could in turn help to plan effective strategies for robots to grasp cloths and use them when completing tasks. This technique, introduced in a paper pre-published on arXiv, was found to generalize well across cloths with different physical properties, and of different shapes, sizes and materials.

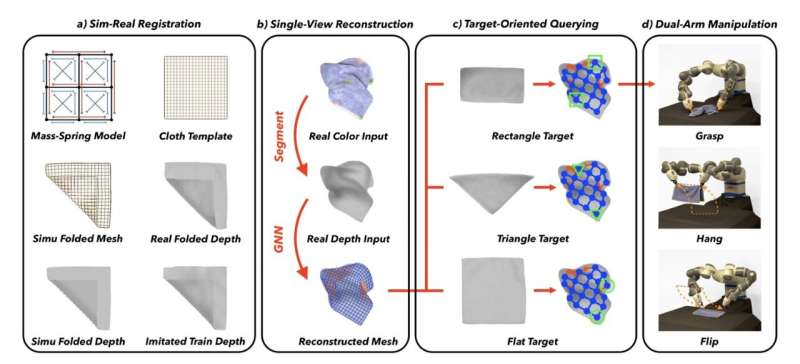

“Precisely reconstructing and manipulating one crumpled cloth is challenging due to the high dimensionality of the cloth model, as well as the limited observation at self-occluded regions,” Wenbo Wang, Gen Li, Miguel Zamora, and Stelian Coros wrote in their paper. “We leverage the recent progress in the field of single-view human body reconstruction to template-based reconstruct the crumpled cloths from their top-view depth observations only, with our proposed sim-real registration protocols.”

To reconstruct entire meshes of crumpled cloths, Wang, Li and their colleagues used a model based on graph neural networks (GNNs). These are a class of algorithms meant to process data that can be represented as a graph.
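To make the idea of graph-based processing concrete, the sketch below runs one round of neighbor averaging (a simple form of message passing) over a small grid-shaped mesh. It is only an illustration, not the architecture from the paper: the grid template, the function names, and the fixed 50/50 mixing rule are all assumptions, and a real GNN would replace that rule with learned weights.

```python
from typing import Dict, List, Tuple


def grid_mesh_edges(rows: int, cols: int) -> List[Tuple[int, int]]:
    """Undirected edges of a rows x cols vertex grid (a stand-in template mesh)."""
    edges = []
    for r in range(rows):
        for c in range(cols):
            v = r * cols + c
            if c + 1 < cols:
                edges.append((v, v + 1))      # neighbor to the right
            if r + 1 < rows:
                edges.append((v, v + cols))   # neighbor below
    return edges


def build_adjacency(num_vertices: int,
                    edges: List[Tuple[int, int]]) -> Dict[int, List[int]]:
    """Map each vertex index to the list of vertices it shares an edge with."""
    adj: Dict[int, List[int]] = {v: [] for v in range(num_vertices)}
    for a, b in edges:
        adj[a].append(b)
        adj[b].append(a)
    return adj


def message_passing_step(features: List[List[float]],
                         adj: Dict[int, List[int]]) -> List[List[float]]:
    """Mix each vertex feature 50/50 with the mean of its neighbors' features.

    Hypothetical update rule for illustration; a trained GNN learns this mixing.
    """
    updated = []
    for v, feat in enumerate(features):
        nbrs = adj[v]
        if not nbrs:                          # isolated vertex: keep as-is
            updated.append(list(feat))
            continue
        mean_nbr = [sum(features[n][k] for n in nbrs) / len(nbrs)
                    for k in range(len(feat))]
        updated.append([0.5 * f + 0.5 * m for f, m in zip(feat, mean_nbr)])
    return updated


if __name__ == "__main__":
    adj = build_adjacency(9, grid_mesh_edges(3, 3))
    feats = [[0.0] for _ in range(9)]
    feats[4] = [1.0]                          # signal only at the center vertex
    print(message_passing_step(feats, adj))
```

After one step, the signal placed on the center vertex has spread to its four direct neighbors, which is how information about visible parts of a cloth can, in principle, inform estimates for neighboring vertices.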

To train their model, the researchers compiled a dataset containing more than 120,000 synthetic images, sourced from simulations of cloth meshes and rendered as top-view RGBD cloth images, as well as more than 3,000 labeled images of cloths captured in real-world settings. After substantial training on this synthetic and real data, the team’s model was found to effectively predict the positions and visibility of all of a cloth’s mesh vertices just by viewing the cloth from above.

“In contrast to previous implicit cloth representations, our reconstruction mesh explicitly indicates the positions and visibilities of the entire cloth mesh vertices, enabling more efficient dual-arm and single-arm target-oriented manipulations,” Wang, Li and their colleagues wrote.
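One way to see why an explicit mesh helps is that a planner can query it directly for graspable points. The sketch below is a hypothetical heuristic, not the selection strategy used in the paper: it assumes each vertex comes with a 3D position and a visibility flag, keeps only the visible candidates (for example, the template's corner vertices), and prefers the highest ones, on the assumption that they are easier to reach from above. The function name and the sample data are invented for illustration.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def select_grasp_vertices(positions: Sequence[Vec3],
                          visible: Sequence[bool],
                          candidates: Sequence[int],
                          num_arms: int) -> List[int]:
    """Pick up to num_arms visible candidate vertices, highest (largest z) first.

    Hypothetical heuristic: occluded candidates are skipped because a
    top-down gripper cannot reach them without disturbing the cloth.
    """
    reachable = [v for v in candidates if visible[v]]
    reachable.sort(key=lambda v: positions[v][2], reverse=True)
    return reachable[:num_arms]


if __name__ == "__main__":
    # Made-up 3 x 3 mesh: (x, y, z) per vertex, with corner 0 folded under.
    positions = [
        (0.0, 0.0, 0.04), (1.0, 0.0, 0.02), (2.0, 0.0, 0.03),
        (0.0, 1.0, 0.02), (1.0, 1.0, 0.06), (2.0, 1.0, 0.02),
        (0.0, 2.0, 0.05), (1.0, 2.0, 0.02), (2.0, 2.0, 0.01),
    ]
    visible = [False] + [True] * 8
    corners = [0, 2, 6, 8]
    print(select_grasp_vertices(positions, visible, corners, num_arms=2))
    print(select_grasp_vertices(positions, visible, corners, num_arms=1))
```

The same function covers both the dual-arm and single-arm cases by changing `num_arms`; an implicit representation would not offer per-vertex positions and visibility to filter on in this way.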

To evaluate their model’s performance, the researchers carried out a series of tests, both in simulation and in an experimental setting. In these tests, they applied their model to the ABB YuMi robot, a humanoid robotic bust with two arms and hands.

In both simulations and experiments, their model was able to produce mesh representations of cloths that could effectively guide the movements of the ABB YuMi robot. These meshes allowed the robot to better hold and manipulate various cloths, whether using a single hand or both.

“Experiments demonstrate that our template-based reconstruction and target-oriented manipulation (TRTM) system can be applied to daily cloths with similar topologies as our template mesh, but have different shapes, sizes, patterns, and physical properties,” the researchers wrote.

The datasets compiled by the researchers and their model’s code are open-source and can be accessed on GitHub. In the future, this work could pave the way for further advances in the field of robotics. Most notably, it could help to advance the capabilities of mobile robots designed to assist humans with chores, improving these robots’ ability to handle tablecloths and various other cloths commonly used for cleaning.

More information:

Wenbo Wang et al, TRTM: Template-based Reconstruction and Target-oriented Manipulation of Crumpled Cloths, arXiv (2023). DOI: 10.48550/arxiv.2308.04670

© 2023 Science X Network

Citation: A technique to facilitate the robotic manipulation of crumpled cloths (2023, September 4), retrieved 4 September 2023 from https://techxplore.com/news/2023-08-technique-robotic-crumpled.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
