To successfully cooperate with humans on manual tasks, robots should be able to grasp and manipulate a variety of objects without dropping or damaging them. Recent research efforts in the field of robotics have thus focused on developing tactile sensors and controllers that could provide robots with the sense of touch and bring their object manipulation capabilities closer to those of humans.

Researchers at the Bristol Robotics Laboratory (BRL)’s Dexterous Robotics group, Pisa University and the Istituto Italiano di Tecnologia (IIT) recently developed a tactile-driven system that could allow robots to grasp various objects more gently and effectively. This system, introduced in a paper pre-published on arXiv, combines a control scheme for force-sensitive touch with a robotic hand fitted with an optical tactile sensor on each of its fingertips.

“The motivation of this work stems from the collaboration between the Dexterous Robotics group at the BRL and researchers at Pisa University and IIT,” Chris Ford, one of the researchers who developed the tactile system, told Tech Xplore. “Pisa/IIT have a unique design of robot hand (the SoftHand), which is based on the human hand. We wanted to combine the Pisa/IIT SoftHand and the BRL TacTip tactile sensor, as the two technologies complement each other due to their biomimetic nature.”

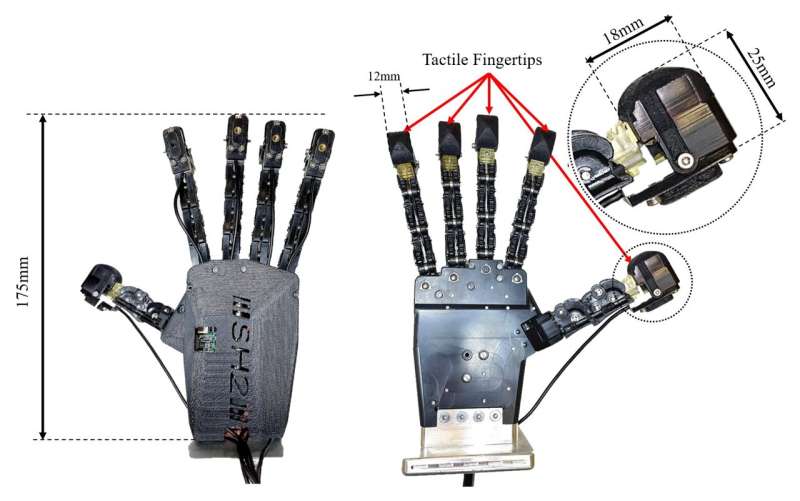

The SoftHand is a robotic hand that resembles human hands in both its shape and function. Originally developed as a prosthetic tool, this hand can grasp with the same postural synergy as human hands.
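To make the idea of a postural synergy concrete, the sketch below shows a single scalar command driving all finger joints together along one fixed synergy vector. The joint names and weights in `SYNERGY` and the function `hand_posture` are hypothetical illustrations, not the SoftHand's actual kinematics or parameters.

```python
# Hypothetical first-synergy vector: how far (in radians) each joint flexes
# at full activation. Values are illustrative, not measured SoftHand data.
SYNERGY: dict[str, float] = {
    "thumb_mcp": 0.6, "thumb_ip": 0.8,
    "index_mcp": 1.2, "index_pip": 1.4,
    "middle_mcp": 1.3, "middle_pip": 1.5,
    "ring_mcp": 1.3, "ring_pip": 1.5,
    "little_mcp": 1.2, "little_pip": 1.4,
}


def hand_posture(activation: float) -> dict[str, float]:
    """Map one scalar command (0 = open, 1 = closed) to every joint angle."""
    # Clamp the command to the valid range before scaling the synergy.
    a = min(max(activation, 0.0), 1.0)
    return {joint: a * weight for joint, weight in SYNERGY.items()}


if __name__ == "__main__":
    half_closed = hand_posture(0.5)
    print(half_closed["index_pip"])  # 0.7
```

A compliant hand such as the SoftHand can deviate from this posture when its fingers meet an object; the sketch ignores contact entirely and only shows how one input can coordinate many joints.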

For the purpose of their study, Ford and his colleagues integrated an optical tactile sensor into each of the fingertips of a SoftHand. They used a sensor called TacTip, which extracts tactile information from a 3D-printed skin whose internal structure resembles that of human skin.

“Our belief is that the combination of these traits is key to humanlike dexterity and manipulation capabilities in robots,” Ford said. “We first explored this possibility in a paper published in 2021, which saw the fingertip sensor integrated onto one digit of the Pisa/IIT SoftHand and benchmarked. The natural continuation of this work was to integrate sensors into all digits and install the hand on a robotic arm to complete some grasping and manipulation tasks using tactile information from the fingertips as sensory feedback.”

The main objective of the recent work by Ford and his colleagues was to further explore the potential of the SoftHand-based system introduced in their previous work, this time with TacTip sensors on all of its fingertips. By integrating this updated system with an advanced control framework, they hoped to reproduce human-like, force-sensitive and gentle grasping.

The new controller introduced in their paper works by measuring the deformation of the soft tactile skin on each of the SoftHand’s fingertips. This deformation serves as a feedback signal that the controller uses to adjust the force the hand applies to the object it is grasping.
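The feedback idea described above can be sketched as a simple proportional loop: compare the measured skin deformation with a target value and nudge the hand's closure command accordingly. This is a minimal sketch under stated assumptions, not the controller from the paper; the class `GentleGraspController`, its gain, the 0-to-1 closure command and the toy object model in `simulate` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GentleGraspController:
    """Hypothetical proportional controller: drives the measured skin
    deformation towards a target by adjusting the hand's closure command
    (0.0 = fully open, 1.0 = fully closed)."""
    target_deformation: float  # desired deformation (arbitrary units)
    gain: float = 0.5          # proportional gain (assumed value)
    command: float = 0.0       # current closure command

    def step(self, measured_deformation: float) -> float:
        # Positive error: skin not deformed enough, so close the hand further.
        # Negative error: pressing too hard, so relax the grip.
        error = self.target_deformation - measured_deformation
        self.command += self.gain * error
        # Keep the command within the hand's valid range.
        self.command = min(max(self.command, 0.0), 1.0)
        return self.command


def simulate(stiffness: float, contact_at: float = 0.3,
             steps: int = 50) -> tuple[float, float]:
    """Toy object model (assumed): the skin deforms only once the fingers
    reach the object at closure `contact_at`, in proportion to stiffness.
    Returns (final deformation, final closure command)."""
    ctrl = GentleGraspController(target_deformation=0.2)
    deformation = 0.0
    for _ in range(steps):
        command = ctrl.step(deformation)
        deformation = stiffness * max(0.0, command - contact_at)
    return deformation, ctrl.command


if __name__ == "__main__":
    for stiffness in (1.0, 3.0):  # a soft and a stiffer object
        d, c = simulate(stiffness)
        print(f"stiffness={stiffness}: deformation={d:.3f}, command={c:.3f}")
```

In this toy model the loop settles at the same target deformation for both objects, while the stiffer object ends up with a smaller closure command. The gain still has to suit the stiffest object: here the loop only converges while `gain * stiffness` stays below 2.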

“This is unique compared to more traditional grasp control methods such as controlling motor current, which can be inaccurate when applied to grippers with a ‘soft’ structure, such as the SoftHand,” Ford explained. “Another unique feature of the controller is that it uses feedback from 5 high-resolution optical tactile sensors. Optical tactile sensors use a camera to monitor changes in the tactile skin and are beneficial due to the large amount of tactile information they capture due to their higher resolution, as every pixel of the image is a node containing tactile information. For a tactile image at a 1080p resolution, this translates to over 2 million tactile nodes.”
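The node count in the quote follows directly from the pixel count of a 1080p image (1920 x 1080 pixels). The sketch below reproduces that arithmetic and scales it to the five fingertip sensors. The 1080p resolution is taken from the quote as an example rather than as the sensors' confirmed capture resolution, and the 30 frames-per-second rate is an assumption for illustration, not a figure from the article.

```python
# Treat every pixel of a 1080p tactile image as one "tactile node".
WIDTH, HEIGHT = 1920, 1080
NUM_SENSORS = 5   # one TacTip per SoftHand fingertip
FPS = 30          # assumed frame rate, not stated in the article

nodes_per_sensor = WIDTH * HEIGHT
total_nodes = nodes_per_sensor * NUM_SENSORS
nodes_per_second = total_nodes * FPS

print(f"{nodes_per_sensor:,} nodes per sensor")       # 2,073,600 nodes per sensor
print(f"{total_nodes:,} nodes across five sensors")   # 10,368,000 nodes across five sensors
print(f"{nodes_per_second:,} nodes per second")       # 311,040,000 nodes per second
```

Even under these modest assumptions, a single controller would have to ingest hundreds of millions of pixel values every second, which is why capturing from several sensors at once becomes the bottleneck.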

Using several optical sensors at once would typically require extensive computational power, as a single computer would need to simultaneously capture high-resolution images from different cameras to collect tactile information at a reasonable speed. To reduce the computational load on their system, Ford and his colleagues developed a parallel-processing hardware “brain” that can collect images from several sensors simultaneously. This greatly improved the reaction times of their grasp controller, allowing it to achieve human-like performance.
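The team's solution is dedicated hardware, which the article does not detail. As a purely software analogy, the sketch below contrasts reading five cameras one after another with reading them concurrently using Python's `concurrent.futures.ThreadPoolExecutor`. `read_frame` is a hypothetical stand-in that simply sleeps for an assumed 50 ms to mimic waiting on a camera.

```python
import time
from concurrent.futures import ThreadPoolExecutor

FRAME_TIME_S = 0.05  # assumed capture latency of one camera frame


def read_frame(sensor_id: int) -> tuple[int, float]:
    """Hypothetical stand-in for grabbing one frame from a tactile camera.
    Sleeping models the I/O wait; returns (sensor_id, completion time)."""
    time.sleep(FRAME_TIME_S)
    return sensor_id, time.perf_counter()


def capture_sequential(sensor_ids: list[int]) -> list[tuple[int, float]]:
    # Each camera is read only after the previous one has finished.
    return [read_frame(i) for i in sensor_ids]


def capture_parallel(sensor_ids: list[int]) -> list[tuple[int, float]]:
    # One worker per sensor, so all cameras are read at the same time.
    # pool.map preserves the input order of sensor_ids.
    with ThreadPoolExecutor(max_workers=len(sensor_ids)) as pool:
        return list(pool.map(read_frame, sensor_ids))


if __name__ == "__main__":
    sensors = list(range(5))
    for name, capture in (("sequential", capture_sequential),
                          ("parallel", capture_parallel)):
        start = time.perf_counter()
        frames = capture(sensors)
        elapsed = time.perf_counter() - start
        print(f"{name}: {len(frames)} frames in {elapsed:.3f} s")
```

Threads help here only because the simulated work is waiting on I/O; CPU-heavy image processing in Python would instead need separate processes or, as in the researchers' system, dedicated parallel hardware.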

“The results of this work show that we can take complex tactile information with a sophistication close to human touch from multiple fingertips and consolidate it into a simple feedback signal which can be used to successfully apply stable, gentle grasps to a wide range of objects regardless of geometry and stiffness without the need for complex tuning,” Ford said. “Another achievement is the development of the hardware ‘brain’ used to capture and process tactile data from multiple high-resolution sensors at the same time.”
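The article does not say how each fingertip image is reduced to a number. One simple, assumed approach is to score each fingertip by the mean absolute pixel change from its undeformed reference image and then average the scores across fingertips. The functions `fingertip_deformation` and `grasp_feedback` below are hypothetical and use this pixel-difference proxy in place of whatever measure the researchers actually use.

```python
from statistics import fmean

Frame = list[list[int]]  # grayscale tactile image, pixel values 0-255


def fingertip_deformation(reference: Frame, current: Frame) -> float:
    """Mean absolute pixel change relative to the undeformed (no-contact)
    reference image: an assumed, simple proxy for skin deformation."""
    diffs = [abs(c - r)
             for ref_row, cur_row in zip(reference, current)
             for r, c in zip(ref_row, cur_row)]
    return fmean(diffs)


def grasp_feedback(references: list[Frame], currents: list[Frame]) -> float:
    """Collapse all fingertips into one scalar by averaging their
    per-fingertip deformation scores (one possible choice among many)."""
    scores = [fingertip_deformation(r, c)
              for r, c in zip(references, currents)]
    return fmean(scores)


if __name__ == "__main__":
    # Five tiny 2x2 "images"; only the first fingertip is in contact.
    refs = [[[100, 100], [100, 100]] for _ in range(5)]
    cur = [[[110, 100], [100, 90]]] + [[[100, 100], [100, 100]] for _ in range(4)]
    print(grasp_feedback(refs, cur))  # 1.0
```

Averaging is only one way to consolidate the signals; taking the maximum instead, for example, would make a controller react to the most heavily loaded fingertip.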

The integration of several sensors significantly improved the tactile sensing capabilities of the researchers’ SoftHand-based robotic system. Combined with the parallel-processing hardware and the new controller, this also improved the system's ability to grasp different types of objects appropriately, without the delays typically introduced by processing large amounts of sensor data.

“We want to capture as much tactile information as possible, therefore data must be captured at as high a resolution as possible, however this quickly becomes process intensive particularly when you start introducing multiple sensors into the system,” Ford said. “Having a scalable, hardware-based solution which allows us to navigate this problem is very helpful when using optical tactile sensors on multi-fingered hands.”

In the future, the new tactile-driven robotic system created by this team could be integrated into humanoid robots, allowing them to handle fragile or deformable objects while collaborating with humans on different tasks. Although Ford and his colleagues have so far primarily tested their system on tasks that require gentle grasping, it could soon also be applied to other grasping and manipulation scenarios.

“The resolution of the tactile data we can capture from these sensors is approaching human tactile resolution, therefore we believe that there is a lot more information we can extract from the tactile images which will allow for more complex manipulation tasks,” Ford added.

“Consequently, we are currently developing some more sophisticated methods to resolve the overall force of the grasp and gain an in-depth understanding of the nature of the contact at each fingertip more accurately. Our hope is that maximizing the potential of these sensors in their integration with anthropomorphic hands will lead to robots with dexterous capabilities comparable to those of humans.”

More information:

Christopher J. Ford et al, Tactile-Driven Gentle Grasping for Human-Robot Collaborative Tasks, arXiv (2023). DOI: 10.48550/arxiv.2303.09346

© 2023 Science X Network

Citation:

A framework to enable touch-enhanced robotic grasping using tactile sensors (2023, April 10) retrieved 10 April 2023 from https://techxplore.com/news/2023-04-framework-enable-touch-enhanced-robotic-grasping.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
